In system design, data flow is an essential concept for understanding how digital architectures work internally. It describes the paths information takes as it moves through a system, from one component to the next. Just as the circulatory system carries oxygen and nutrients through the human body, data flow carries information between software and hardware components so they can function together. Monitoring dataflow status is therefore crucial for keeping digital infrastructure efficient, reliable, and secure. In this article, we explore the fundamentals of data flow, why it matters, how to monitor its status, and best practices for managing it.

What is Dataflow Status?

Dataflow status refers to the real-time condition of data as it moves through a system. It shows whether data is being processed efficiently, where delays occur, and whether any part of the system has failed or become a bottleneck. Dataflow status is tracked in many contexts, including cloud computing, network management, and real-time analytics.
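One way to make dataflow status concrete is to keep a few counters per pipeline stage and classify each stage from them. The minimal Python sketch below is illustrative only: the `StageStatus` class, its fields, and the thresholds in `classify` are hypothetical choices, not part of any particular monitoring tool.

```python
from dataclasses import dataclass

@dataclass
class StageStatus:
    """Hypothetical point-in-time status of one pipeline stage."""
    name: str
    records_in: int     # records received by the stage
    records_out: int    # records successfully passed downstream
    errors: int         # records that failed processing
    lag_seconds: float  # age of the oldest record still waiting

    @property
    def error_rate(self) -> float:
        # An idle stage (no input) is treated as having no errors.
        return self.errors / self.records_in if self.records_in else 0.0

    def classify(self, max_lag: float = 30.0, max_error_rate: float = 0.01) -> str:
        # Illustrative thresholds; real limits depend on the system's requirements.
        if self.records_in > 0 and self.records_out == 0:
            return "FAILED"      # data arrives but nothing leaves
        if self.lag_seconds > max_lag or self.error_rate > max_error_rate:
            return "DEGRADED"    # delayed or error-prone, but still moving
        return "HEALTHY"

stages = [
    StageStatus("ingest", records_in=10_000, records_out=9_990, errors=10, lag_seconds=2.0),
    StageStatus("transform", records_in=9_990, records_out=9_000, errors=5, lag_seconds=45.0),
    StageStatus("load", records_in=9_000, records_out=0, errors=0, lag_seconds=120.0),
]
for stage in stages:
    print(f"{stage.name:<10} {stage.classify()}")
```

With these sample numbers, `ingest` is healthy, `transform` is degraded because of its 45-second lag, and `load` is marked failed because records arrive but none leave.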

Importance of Monitoring Dataflow Status

Monitoring dataflow status helps keep systems running efficiently and without interruption. Key reasons why monitoring data flow matters include:

- Performance Optimization: Identifying slow-moving or blocked data paths helps in improving system speed and responsiveness.

- Security Enhancement: Detecting unauthorized data access or unexpected data movements helps prevent cyber threats.

- Error Detection: Makes it easier to detect and troubleshoot errors such as missing or corrupted data packets.

- Resource Allocation: Optimizes the use of computational and network resources by identifying underutilized or overloaded pathways.
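Error detection often rests on two simple checks: sequence numbers reveal missing records, and checksums reveal corrupted ones. The sketch below uses Python's standard `zlib.crc32`; the packet layout (`seq`, `payload`, `crc`) and the helper names are hypothetical. CRC-32 only catches accidental corruption; detecting deliberate tampering needs a keyed MAC such as HMAC.

```python
import zlib

def make_packet(seq: int, payload: bytes) -> dict:
    # Attach a sequence number and a CRC-32 checksum to each payload.
    return {"seq": seq, "payload": payload, "crc": zlib.crc32(payload)}

def check_stream(packets: list[dict]) -> dict:
    """Report missing sequence numbers and packets whose checksum does not match."""
    seen = sorted(p["seq"] for p in packets)
    missing = []
    if seen:
        # Only gaps between the first and last received numbers can be detected.
        expected = set(range(seen[0], seen[-1] + 1))
        missing = sorted(expected - set(seen))
    corrupted = [p["seq"] for p in packets if zlib.crc32(p["payload"]) != p["crc"]]
    return {"missing": missing, "corrupted": corrupted}

packets = [make_packet(i, f"record-{i}".encode()) for i in range(5)]
del packets[2]                       # simulate a lost packet (seq 2)
packets[0]["payload"] = b"garbled"   # simulate corruption in transit (seq 0)
print(check_stream(packets))
```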

Key Components of Data Flow

Dataflow systems are composed of several components that work together to ensure smooth data movement. Some of the key components include:

1. Data Sources

These are the origins of the data, such as databases, APIs, sensors, or user inputs.

2. Data Processors

Processing units that transform, filter, or analyze data before passing it on. These may include cloud servers, local computing devices, or artificial intelligence models.

3. Data Storage

Temporary or permanent locations where data is stored, such as SQL databases, cloud storage, or distributed file systems.

4. Data Transmission Channels

The pathways through which data travels, such as network protocols, pipelines, or data buses.

5. Data Consumers

Endpoints where data is used or displayed, such as applications, dashboards, or analytics systems.
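The five components can be wired together in a few lines. In this minimal sketch, `queue.Queue` objects stand in for transmission channels, a Python list stands in for storage, and the readings and filtering rule are made up for illustration; the function names are hypothetical.

```python
import queue
import threading

def data_source(out_q: queue.Queue) -> None:
    # Data source: emits raw readings (stand-in for a sensor or API).
    for reading in [3, 7, -1, 12]:
        out_q.put(reading)
    out_q.put(None)  # sentinel: end of stream

def data_processor(in_q: queue.Queue, out_q: queue.Queue) -> None:
    # Data processor: drops invalid (negative) readings and scales the rest.
    while (item := in_q.get()) is not None:
        if item >= 0:
            out_q.put(item * 10)
    out_q.put(None)  # forward the sentinel downstream

def data_storage(in_q: queue.Queue, store: list) -> None:
    # Data storage: appends to an in-memory list (stand-in for a database).
    while (item := in_q.get()) is not None:
        store.append(item)

raw_q: queue.Queue = queue.Queue()    # transmission channel: source -> processor
clean_q: queue.Queue = queue.Queue()  # transmission channel: processor -> storage
store: list = []

threads = [
    threading.Thread(target=data_source, args=(raw_q,)),
    threading.Thread(target=data_processor, args=(raw_q, clean_q)),
    threading.Thread(target=data_storage, args=(clean_q, store)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Data consumer: a trivial "dashboard" that reads from storage.
print(f"stored={store} total={sum(store)}")
```

Each stage runs independently and communicates only through its channels, which is what makes per-stage monitoring (queue depth, throughput, errors) possible.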

How to Monitor Dataflow Status

Effective monitoring of dataflow status requires robust tools and methodologies. Here are some of the best practices for tracking data movement within systems:

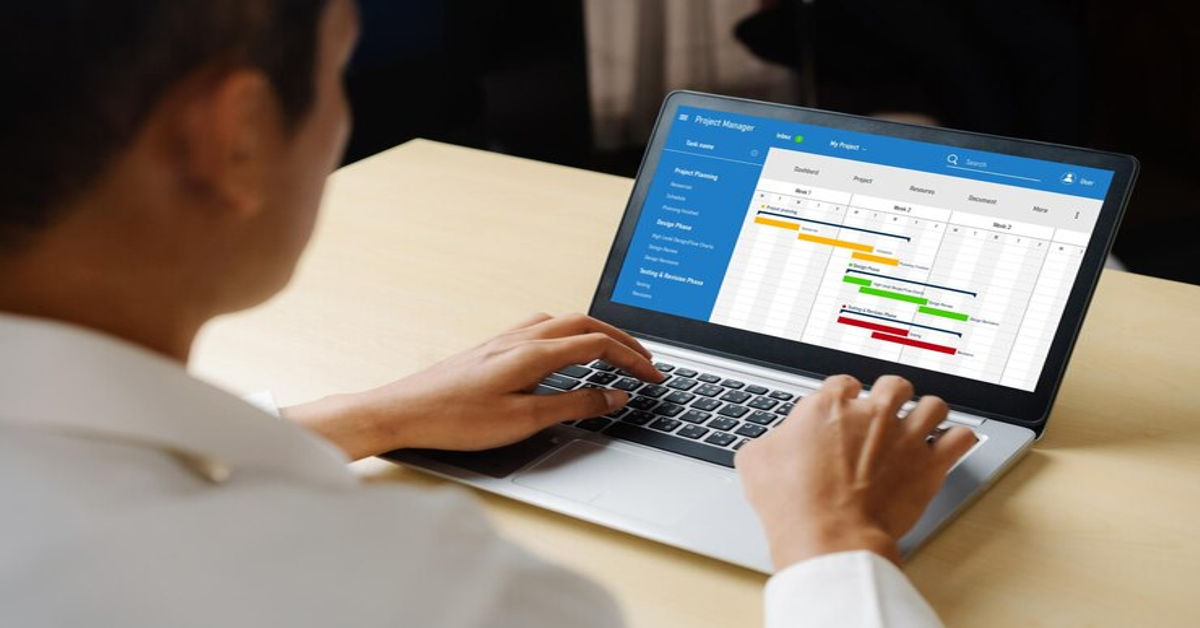

1. Use Data Flow Visualization Tools

Tools such as Apache NiFi, Google Cloud Dataflow, and Microsoft Azure Data Factory provide graphical representations of data movement that help identify bottlenecks.
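Visualization tools highlight bottlenecks graphically, but the underlying reasoning is simple: in a linear pipeline, end-to-end throughput is capped by the slowest stage, and a backlog builds up in front of it. The sketch below assumes you already have per-stage throughput figures (the numbers are hypothetical) and does not call any of the tools named above.

```python
def find_bottleneck(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the stage with the lowest sustainable throughput (records/second).

    In a linear pipeline, overall throughput cannot exceed the slowest stage.
    """
    name = min(stage_rates, key=stage_rates.get)
    return name, stage_rates[name]

def backlog_growth(arrival_rate: float, service_rate: float, seconds: float) -> float:
    # Records that pile up in front of a stage when arrivals outpace processing.
    return max(0.0, (arrival_rate - service_rate) * seconds)

rates = {"ingest": 5_000.0, "enrich": 1_200.0, "write": 3_000.0}
stage, rate = find_bottleneck(rates)
print(stage, rate)
print(backlog_growth(arrival_rate=rates["ingest"], service_rate=rate, seconds=60))
```

Here the `enrich` stage limits the whole pipeline, and about 228,000 records would queue up in front of it every minute.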

2. Implement Logging and Alerts

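Logging data movement events (for example, how many records each stage receives, processes, and rejects) and setting up alerts for anomalies, such as a sudden rise in failures, can help detect problems early.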
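A minimal sketch with Python's standard `logging` module: each batch is logged as an event, and a warning-level alert is emitted when the failure rate crosses a threshold. The 5% threshold and the `record_batch` helper are hypothetical; real systems usually forward such alerts to a monitoring or paging service.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("dataflow")

ERROR_RATE_THRESHOLD = 0.05  # hypothetical alerting threshold (5%)

def record_batch(stage: str, received: int, failed: int) -> bool:
    """Log one batch event; emit a warning-level alert if the error rate is too high.

    Returns True if an alert was raised.
    """
    rate = failed / received if received else 0.0
    log.info("stage=%s received=%d failed=%d error_rate=%.3f", stage, received, failed, rate)
    if rate > ERROR_RATE_THRESHOLD:
        # In production, this is where a pager or chat notification might be triggered.
        log.warning("ALERT stage=%s error rate %.1f%% exceeds %.1f%%",
                    stage, rate * 100, ERROR_RATE_THRESHOLD * 100)
        return True
    return False

record_batch("ingest", received=1_000, failed=3)
record_batch("transform", received=1_000, failed=80)
```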

3. Leverage AI-Based Analytics

Machine learning models can analyze data patterns to predict failures and recommend optimizations.
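Training a machine learning model is beyond a short example, so the sketch below swaps in a much simpler statistical technique, a rolling z-score, to illustrate the same idea: learn a baseline from recent data and flag large deviations from it. The window size, threshold, and throughput series are hypothetical.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(values, window: int = 10, threshold: float = 3.0):
    """Flag points that deviate more than `threshold` standard deviations
    from the mean of the preceding `window` points (rolling z-score)."""
    history = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(v - mu) / sigma > threshold:
                anomalies.append((i, v))
        history.append(v)
    return anomalies

# Hypothetical per-minute throughput (records/second) with one sudden drop.
throughput = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99, 100, 30, 101, 100]
print(detect_anomalies(throughput))
```

The drop at index 11 is flagged; an ML-based system would replace the fixed baseline with a learned model but follow the same detect-and-alert pattern.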

4. Monitor Network Traffic

For systems that rely on network data transmission, monitoring tools like Wireshark and SolarWinds can provide real-time insights into data packet movements.
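Network monitoring tools typically derive throughput from cumulative byte counters sampled at fixed intervals (on Linux, for instance, per-interface counters are exposed in `/proc/net/dev`). The arithmetic is sketched below with hypothetical counter readings; it does not use Wireshark or SolarWinds, and for brevity it ignores counter wraparound.

```python
def link_utilization(bytes_t0: int, bytes_t1: int, interval_s: float,
                     capacity_bps: float) -> tuple[float, float]:
    """Return (throughput in Mbit/s, utilization as a fraction of link capacity)
    from two readings of a cumulative byte counter taken interval_s seconds apart."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    bits = (bytes_t1 - bytes_t0) * 8   # bytes -> bits
    bps = bits / interval_s            # bits per second over the interval
    return bps / 1e6, bps / capacity_bps

# Hypothetical readings: 750 MB transferred in 60 s on a 1 Gbit/s link.
mbps, util = link_utilization(1_000_000_000, 1_750_000_000, 60.0, 1e9)
print(f"{mbps:.1f} Mbit/s, {util:.0%} of capacity")
```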

5. Ensure Redundancy and Failover Mechanisms

Redundancy mechanisms ensure that data can be rerouted in case of failures, minimizing downtime.
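A minimal failover sketch: try the primary destination first and fall back to redundant ones if it cannot be reached. The destinations here are hypothetical in-process functions; in a real system they would be network clients, and you would typically add timeouts and health checks.

```python
from collections.abc import Callable, Sequence

class AllDestinationsFailed(Exception):
    """Raised when neither the primary nor any backup destination accepts the data."""

def send_with_failover(record: bytes, destinations: Sequence[Callable[[bytes], None]]) -> int:
    """Try each destination in order; return the index of the one that succeeded."""
    errors = []
    for i, send in enumerate(destinations):
        try:
            send(record)
            return i
        except ConnectionError as exc:
            # Remember the failure and fall through to the next (redundant) destination.
            errors.append(exc)
    raise AllDestinationsFailed(errors)

# Hypothetical destinations: the primary is down, the replica is healthy.
replica_store: list[bytes] = []

def primary(record: bytes) -> None:
    raise ConnectionError("primary unreachable")

def replica(record: bytes) -> None:
    replica_store.append(record)

used = send_with_failover(b"order-42", [primary, replica])
print(f"delivered via destination {used}; replica holds {replica_store}")
```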

Common Dataflow Issues and Solutions

Dataflow systems are prone to several issues that can impact performance and reliability. Below is a table highlighting common problems and their solutions:

| Issue | Cause | Solution |
| --- | --- | --- |
| Data Bottlenecks | Overloaded processing units | Distribute the workload across additional processing units, for example by scaling out on a cloud platform |
| Data Loss | Network failures or hardware issues | Implement backup and failover mechanisms |
| Slow Data Transmission | Poor network infrastructure | Upgrade network bandwidth and use optimized protocols |
| Security Vulnerabilities | Unencrypted data in transit | Use end-to-end encryption and access controls |
| System Downtime | Software bugs or hardware failures | Deploy automated recovery and redundancy systems |
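Distributing the workload, the solution for data bottlenecks, usually starts with a partitioning rule that decides which worker handles which record. A minimal sketch of hash partitioning, assuming each record carries a key such as a user ID; the worker count and keys are hypothetical.

```python
import zlib
from collections import Counter

def assign_worker(key: str, num_workers: int) -> int:
    # A stable hash (CRC-32) keeps each key on the same worker across runs;
    # Python's built-in hash() of str is randomized per process, so it is avoided here.
    return zlib.crc32(key.encode("utf-8")) % num_workers

keys = [f"user-{i}" for i in range(1_000)]
load = Counter(assign_worker(k, 4) for k in keys)
print(sorted(load.items()))
```

Keeping the same key on the same worker preserves per-key ordering; the trade-off is that a single very busy key can still overload one worker.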

Best Practices for Optimizing Dataflow

To ensure optimal performance and security of data flow, consider the following best practices:

- Optimize Data Processing Pipelines: Use parallel processing and load balancing to improve data throughput.

- Implement Caching Mechanisms: Reduce unnecessary data requests by caching frequently accessed data.

- Regularly Audit Dataflow Logs: Identify inefficiencies and security vulnerabilities through log analysis.

- Utilize Cloud-Based Dataflow Solutions: Cloud platforms offer scalability and fault tolerance for handling large volumes of data.

- Adopt Standardized Protocols: Use standard data exchange protocols such as HTTP, MQTT, or WebSockets for compatibility, and run them over TLS (HTTPS, MQTT over TLS, WSS) to protect data in transit.
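Two of these practices, parallel processing and caching, can be combined in a few lines of standard-library Python. In this sketch, `lookup_region` is a hypothetical slow lookup simulated with `time.sleep`, `functools.lru_cache` avoids repeating it for the same customer, and `ThreadPoolExecutor` processes records concurrently.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

@lru_cache(maxsize=1024)
def lookup_region(customer_id: int) -> str:
    # Stand-in for a slow reference-data lookup, such as a remote API call.
    time.sleep(0.05)
    return "EU" if customer_id % 2 else "US"

def enrich(record: dict) -> dict:
    # Add the (cached) region to a copy of the record.
    return {**record, "region": lookup_region(record["customer_id"])}

records = [{"order": n, "customer_id": n % 5} for n in range(20)]

# Process records in parallel; repeated customer IDs are served from the cache.
with ThreadPoolExecutor(max_workers=4) as pool:
    enriched = list(pool.map(enrich, records))  # map() preserves input order

print(enriched[:3])
print(lookup_region.cache_info())
```

Threads suit I/O-bound lookups like this one; for CPU-bound transformations in CPython, `ProcessPoolExecutor` is usually the better choice. Note that `lru_cache` does not stop two threads from computing the same missing key at the same moment, so the hit and miss counts can vary slightly between runs.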

Conclusion

Understanding dataflow status is crucial for maintaining efficient and secure digital systems. By implementing monitoring tools, optimizing data pipelines, and adopting best practices, organizations can improve performance and reduce risk. Whether in cloud computing, software development, or network management, a smooth, well-monitored data flow supports better decision-making and greater system reliability.

For businesses and IT professionals, staying updated with the latest advancements in dataflow technologies is key to ensuring robust and scalable infrastructures. Keep exploring new tools and methodologies to enhance your data management strategies.

FAQs

1. What is the primary purpose of dataflow monitoring?

Dataflow monitoring helps track data movement within a system, ensuring efficiency, security, and real-time troubleshooting of issues.

2. How can I check the status of my dataflow?

Most dataflow platforms include a monitoring interface. For example, Google Cloud Dataflow, Apache NiFi, and AWS Data Pipeline let you view the state of running pipelines and spot stalled or failing steps.

3. What are some common indicators of dataflow issues?

Slow data processing, increased error rates, missing data, and network congestion are common indicators of problems in data flow.

4. Can AI improve dataflow efficiency?

Yes, AI can analyze data movement patterns, predict failures, and optimize resource allocation for improved performance.

5. What security measures should be implemented for secure dataflow?

Use encryption, multi-factor authentication, firewall protections, and continuous monitoring to secure data transmission and storage.